Back in August, we took a test spin with Jasper, an artificial intelligence (AI) content writing program that had been (and still is) aggressively advertised as a “revolutionary” tool for creating online marketing content.

We were extremely underwhelmed by its ability to provide anything more than a rudimentary overview of common aspects of the law. “Jasper is a really cool idea,” we said. “We’d be willing to take another look in maybe a decade or so to see where it’s at.”

We just tried out ChatGPT and my, how quickly a decade passes!

Hey ChatGPT: How is the Negligence of Children Handled in Missouri?

ChatGPT’s response:

In Missouri, the negligence of children is typically handled differently than the negligence of adults, due to the fact that children may not have the same capacity for judgment and responsibility as adults.

Under Missouri law, children under the age of 7 are generally considered to be legally incapable of negligence, which means they cannot be held responsible for their actions. Children between the ages of 7 and 12 are presumed to be incapable of negligence, but this presumption can be overcome if the defendant can show that the child had the capacity to understand the consequences of their actions.

For children over the age of 12, they are held to the same standard of care as an adult in similar circumstances. This means that they can be held liable for their negligence if they fail to act as a reasonable person would under similar circumstances.

In cases involving children, the court will consider the child’s age, maturity, and experience when determining whether they acted negligently. The court will also consider whether the child was engaged in an activity that was appropriate for their age and level of experience.

Overall, the handling of negligence of children in Missouri depends on their age and capacity to understand the consequences of their actions. It is important to consult with an experienced personal injury attorney if you are involved in a case where the negligence of a child may be at issue.

Whoa.

Unlike Jasper, which needed constant urging, ChatGPT typed up this 239-word passage on its own, in one flow of writing, with no nudges to make it delve into the subject matter.

Is it correct? No. “The fault of a child should be determined by the fact-finder in each case, based upon that degree of care exercised by children of the same or similar age, judgment, and experience. Only if the child is so young or the evidence of incapacity so overwhelming that reasonable minds could not differ on the issue, should trial courts rule as a matter of law… that the child cannot be capable of fault.” (Lester v. Sayles, 850 S.W.2d 858 (Mo. 1993)).

Is it obviously incorrect? No. ChatGPT’s answer smelled very close to the bright-line “Rule of 7s” that lots of courts use when dealing with young tortfeasors, or with young victims who contributed fault to the accident that hurt them. However, ChatGPT doesn’t provide citations or links for its answers. We had to do some legal research on our own to find that Missouri was not one of those states. In so doing, though, we found that the online material that ChatGPT likely had to read through was far from ideal: A Google search of the prompt that we gave to ChatGPT spat back all sorts of off-topic issues, from child abuse to suing on a child’s behalf to parental responsibility to the tolling of the statute of limitations for children in a personal injury case.

The fact that it got as close as it did to what we were looking for is fairly impressive.

And disturbing.

Then we looked at the writing style of ChatGPT’s output. Unlike Jasper, it was not overwhelmingly apparent that it was the result of predictive typing.

That made us wonder: How well would it do against AI-detecting tools and other plagiarism detectors?

AI Detectors are Fallible…

With the sudden proliferation of AI-generated content and its predictably immediate use to write term papers, up sprang another online industry: Programs that claimed they could detect AI-written content.

We copied the passage that ChatGPT gave us about the negligence of minors and pasted it, untouched, into the first four tools that came up in a Google search for “AI content checker.” The results (Disclaimer: This is not a recommendation or a negative review of any of these tools, just an isolated use):

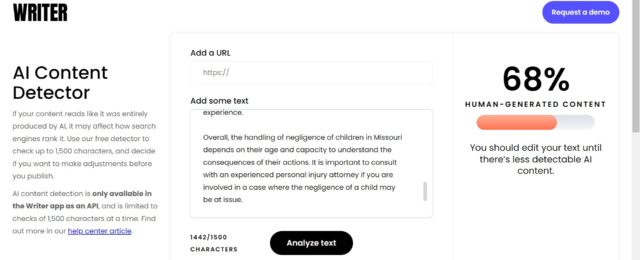

- Writer.com – 68% is human-generated

- Copyleaks.com – 99.9% sure that the content is AI-generated

- Crossplag.com – 100% written by AI

- ContentAtScale.com – A “human content score” of 25%, with sub-scores of:

  - Predictability: 12%

  - Probability: 22%

  - Pattern: 43%

Three out of four leaned toward AI, but that’s not ideal. We would have hoped that a blatant copy/paste of AI content would get red-flagged at 100% across the board, rather than at or near 100% by only half of them.

Which of course raised the question: How easily can we fool them?

… And Easily Broken

We made the following non-substantive edits to the passage that ChatGPT provided (deletions are struck through):

In Missouri, the negligence of children is typically handled differently than the negligence of adults, ~~due to the fact that~~ as children may not have ~~the~~ their same capacity for judgment and responsibility as adults.

Under Missouri law, children under the age of 7 are generally considered to be legally incapable of negligence, ~~which means they~~ and cannot be held ~~responsible~~ liable for their actions. Children between the ages of 7 and 12 are presumed to be incapable of negligence, ~~but~~ though this presumption can be overcome if the defendant can show that the child had the capacity to understand the consequences of their actions.

~~For children over the age of~~ Children over 12~~, they~~ are held to the same standard of care as an adult in similar circumstances. This means that they can be held liable for their negligence if they fail to act as a reasonable person would under similar circumstances.

In cases involving children, the court will consider the child’s age, maturity, and experience when determining whether they acted negligently. The court will also consider whether the child was engaged in an activity that was appropriate for their age and level of experience.

Overall, the handling of negligence of children in Missouri depends on their age and capacity to understand the consequences of their actions. It is important to consult with an experienced personal injury attorney if you are involved in a case where the negligence of a child may be at issue.

This produced some movement:

- Writer.com – 78% is human-generated

- Copyleaks.com – 99.4% sure that the content is AI-generated

- Crossplag.com – 100% written by AI

- ContentAtScale.com – A “human content score” of 34%, with sub-scores of:

  - Predictability: 32%

  - Probability: 27%

  - Pattern: 43%

But then we had an idea. We used the exact same passage as above, but added something that we have never seen an AI do before.

We made a typo: “…cannot be meld liable for their actions.”

And will you look at that!

- Writer.com – 100% is human-generated

- Copyleaks.com – 76.7% sure that the content is human-generated (a 76.1-percentage-point swing!)

- Crossplag.com – 68% written by AI

- ContentAtScale.com – A “human content score” of 54%, with sub-scores of:

  - Predictability: 53%

  - Probability: 32%

  - Pattern: 75%

How fitting.

If there’s one thing we learned in torts class, it was that humans can be counted on to make mistakes.

But does it work the other way around? Can human-written content get mislabeled as AI-generated?

Yes.

We copied and pasted the content from a few old, pre-AI blog posts from our site, ran them through these AI detectors, and got the following results:

- The Limitations of Keywords: Diversifying Your Content (July 18, 2016)

  - Writer.com – 100% is human-generated

  - Copyleaks.com – 87.7% sure that this is human-generated

  - Crossplag.com – 2% written by AI

  - ContentAtScale.com – A “human content score” of 97%, with sub-scores of:

    - Predictability: 89%

    - Probability: 100%

    - Pattern: 100%

- Legal Blogging and Navigational Queries (March 31, 2016)

  - Writer.com – 100% is human-generated

  - Copyleaks.com – 81.7% sure that this is human-generated

  - Crossplag.com – 1% written by AI

  - ContentAtScale.com – A “human content score” of 94%, with sub-scores of:

    - Predictability: 81%

    - Probability: 100%

    - Pattern: 100%

- Misinformation in Online Marketing Studies (March 6, 2017)

  - Writer.com – 100% is human-generated

  - Copyleaks.com – 85.2% sure that this is human-generated

  - Crossplag.com – 1% written by AI

  - ContentAtScale.com – A “human content score” of 97%, with sub-scores of:

    - Predictability: 91%

    - Probability: 100%

    - Pattern: 100%

- The Double-Edged Sword of Google’s Lengthened Meta Descriptions (December 25, 2017)

  - Writer.com – 100% is human-generated

  - Copyleaks.com – 84.8% sure that this is human-generated

  - Crossplag.com – 1% written by AI

  - ContentAtScale.com – A “human content score” of 96%, with sub-scores of:

    - Predictability: 86%

    - Probability: 100%

    - Pattern: 100%

Given that these articles were all written before AI was out of the proverbial evolutionary soup, the fact that we are seeing any uncertainty at all shows how little we can rely on these tools.

It is also pretty clear that Copyleaks’ tool is very eager to see AI in online content, while Writer.com’s really wants to see the human side of things.
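For anyone who wants to line these numbers up side by side, here is a minimal Python sketch that puts every score from the three ChatGPT runs on a single “percent human” scale and measures how far each tool moved. It rests on an assumption of ours: that a tool’s “X% AI” score can be read as “(100 − X)% human.” The tools themselves may not mean it that way, and the names `RUNS`, `percent_human`, and `swing` are ours, purely for illustration.

```python
from statistics import mean

# Scores copied from the results above, stored as (value, reports_percent_ai).
# Assumption: an "X% AI" score can be read as "(100 - X)% human".
RUNS = {
    "untouched": {
        "Writer.com": (68, False),
        "Copyleaks.com": (99.9, True),
        "Crossplag.com": (100, True),
        "ContentAtScale.com": (25, False),
    },
    "light edits": {
        "Writer.com": (78, False),
        "Copyleaks.com": (99.4, True),
        "Crossplag.com": (100, True),
        "ContentAtScale.com": (34, False),
    },
    "with typo": {
        "Writer.com": (100, False),
        "Copyleaks.com": (76.7, False),
        "Crossplag.com": (68, True),
        "ContentAtScale.com": (54, False),
    },
}

# Copyleaks' "sure this is human-generated" scores for our four pre-AI posts.
COPYLEAKS_ON_OLD_POSTS = [87.7, 81.7, 85.2, 84.8]


def percent_human(value, reports_percent_ai):
    """Convert a raw tool score to a common 'percent human' scale."""
    return round(100 - value, 1) if reports_percent_ai else float(value)


def swing(tool, before, after):
    """Percentage-point change in 'percent human' between two runs."""
    delta = percent_human(*RUNS[after][tool]) - percent_human(*RUNS[before][tool])
    return round(delta, 1)


if __name__ == "__main__":
    for tool in RUNS["untouched"]:
        points = swing(tool, "light edits", "with typo")
        print(f"{tool}: {points:+.1f} points after the typo")
    average = mean(COPYLEAKS_ON_OLD_POSTS)
    print(f"Copyleaks on our pre-AI posts: {average:.2f}% human on average")
```

On that scale, the Copyleaks swing between the lightly edited passage and the version with the typo works out to the same 76.1 points noted above. Four tools and a handful of passages are a tiny sample, so treat this as a way to read our results, not as a benchmark.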

So, What Does This Mean for Your Online Legal Content Marketing?

The most important thing to do is calm down.

Yes, as professional legal content writers, we can safely say that ChatGPT has set the industry on fire and blown up the market. Unlike Jasper’s content back in August, ChatGPT’s output was not readily rejectable: Instead, it looked and read like loads of other content that you see online every day, and was the epitome of cheap.

It was, however, still legally incorrect. What it said was the law in Missouri was not, in fact, the law in Missouri. Close, but not quite.

Firing your legal writers or writing your next post in ChatGPT and then copying it to your law firm’s site is likely unwise, or at least premature.

However, the inability of AI-detecting tools to reliably pick up AI-generated content is disconcerting. As we’ve shown, writers can easily get them to label predominantly AI content as human-written with a couple of tweaks and a typo, and human-generated content can be mislabeled as potentially written by AI.

Given that Google has waffled about its stance on AI-generated content – shifting from being strongly against it to publishing guidelines with headlines that include “Rewarding high-quality content, however it is produced” – it can be easy to jump to conclusions and make bold decisions about how to move forward.

Our advice: Don’t.

Let the market resolve itself. Roll over your online marketing budget to next month if you’re really worried about it. But don’t scrap everything that you’ve done so far and switch over to AI content writers yet. At least not until you’re ready to fact-check everything that comes out of them, until we know for sure that search engines won’t be penalizing AI-generated content in the future, and until we know that AI programs can produce articles that actually rank well (we don’t know that, and we at Myers Freelance doubt that they ever will, particularly for competitive searches).

The times they are a-changing. You can try to get ahead of the curve, but honestly, so much can happen at this point that we think the better course of action would be to sit back and let things play out for a couple of weeks.